5. Classical Learning#

5.1. 🎲 Metal or insulator?#

In life, some decisions are difficult to make. We hope that our experience informs a choice that is better than a random guess. The same is true for machine learning models.

There are many situations where we want to classify materials according to their properties. One fundamental characteristic is whether a material is a metal or insulator. For this exercise, we can refer to these as class 0 and class 1 materials, respectively. This is a binary classification problem with class labels:

(y = 0) → metal

(y = 1) → insulator

From our general knowledge, Cu should be 0 and MgO should be 1, but what about Tl2O3 or Ni2Zn4?

5.1.1. Theoretical background#

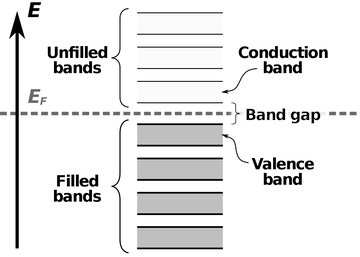

Metals are characterised by their free electrons that facilitate the flow of electric current. This arises from a partially filled conduction band, allowing electrons to move easily when subjected to an electric field.

Insulators are characterised by an occupied valence band and empty conduction band, impeding the flow of current. The absence of charge carriers hinders electrical conductivity, making them effective insulators of electricity. Understanding these fundamental differences is crucial for designing and optimising electronic devices.

In this practical, we can use the electronic band gap of a material as a simple descriptor of whether it is a metal (Eg = 0) or an insulator (Eg > 0).

This classification is coarse as we are ignoring the intermediate regime of semiconductors and more exotic behaviour such as superconductivity.

5.2. \(k\)-means clustering#

Let’s start by generating synthetic data for materials along with their class labels. To make the analysis faster and more illustrative, we can perform dimensionality reduction from a 10D to 2D feature space, and then cluster the data using \(k\)-means.

# Installation of libraries

!pip install elementembeddings==0.6.1 --quiet

!pip install matminer==0.9.3 --quiet

# Import of modules

import numpy as np # Numerical operations

import pandas as pd # DataFrames

import matplotlib.pyplot as plt # Plotting

import seaborn as sns # Visualisation

from sklearn.decomposition import PCA # Principal component analysis (PCA)

from sklearn.cluster import KMeans # k-means clustering

from sklearn.metrics import accuracy_score, confusion_matrix # Model evaluation

from sklearn.tree import DecisionTreeClassifier # Decision tree classifier

Colab error solution

If running the import module cell fails with an "AttributeError", click `Runtime` -> `Restart Session` and then simply rerun the cell.5.3. Decision tree classifier#

Let’s see if we can do better using a dedicated classifier. We will now train a decision tree to tackle the same problem and visualise the decision boundary.

# Step 0: Set the depth of the decision tree

max_tree_depth = 0

# Step 1: Train a decision tree classifier

def train_decision_tree(depth, reduced_data, labels):

tree_classifier = DecisionTreeClassifier(max_depth=depth, random_state=42)

tree_classifier.fit(reduced_data, labels)

return tree_classifier

tree_classifier = train_decision_tree(max_tree_depth, reduced_data, labels)

predicted_labels = tree_classifier.predict(reduced_data)

# Step 2: Create a plot to visualise the decision boundary of the decision tree

plt.figure(figsize=(5, 4))

# Plot the materials labeled as metal (label=1)

plt.scatter(reduced_data[labels == 1, 0], reduced_data[labels == 1, 1], c='lightblue', label='Metal')

# Plot the materials labeled as insulator (label=0)

plt.scatter(reduced_data[labels == 0, 0], reduced_data[labels == 0, 1], c='lightcoral', label='Insulator')

# Plot the decision boundary of the decision tree classifier

h = 0.02 # step size for the meshgrid

x_min, x_max = reduced_data[:, 0].min() - 1, reduced_data[:, 0].max() + 1

y_min, y_max = reduced_data[:, 1].min() - 1, reduced_data[:, 1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))

Z = tree_classifier.predict(np.c_[xx.ravel(), yy.ravel()])

Z = Z.reshape(xx.shape)

plt.contourf(xx, yy, Z, alpha=0.5, cmap='Pastel1')

plt.xlabel('Principal Component 1')

plt.ylabel('Principal Component 2')

plt.title(f'Decision tree (max depth={max_tree_depth}) of synthetic data')

plt.legend()

plt.show()

Code hint

With no nodes, you have made an indecisive tree 🥁.Increase the value of max_tree_depth and look at the behaviour.

There should be more structure in the decision boundary due to the more complex model, especially as you increase the tree depth.

\(k\)-means clustering provides a simple way to group materials based on similarity, yielding a clear piecewise linear decision boundary. On the other hand, the decision tree classifier does better in handling non-linear separations. It constructs a boundary based on different feature thresholds, enabling it to capture fine-grained patterns. As always in ML, there is a balance of trade-offs between simplicity and accuracy.

Is the decision tree more accurate? Let’s see.

# Step 3: Quantify classification accuracy

accuracy = accuracy_score(labels, predicted_labels)

conf_matrix = confusion_matrix(labels, predicted_labels)

print("Accuracy:", accuracy)

print("\nConfusion Matrix:")

print(conf_matrix)

If you choose a large value for the tree depth, the decision tree will approach a perfect accuracy of 1.0. It does this by memorising (overfitting) the training data but is unlikely to generalise well to new (unseen) data, i.e. overfitting. In contrast, the accuracy of \(k\)-means clustering is lower because it is an unsupervised algorithm designed for clustering, not classification. Its performance depends on the data structure and the presence of distinct clusters in that feature space.

5.4. Real materials#

We can save time again by making use of a pre-built dataset. We will return to matminer, which we used before, and load matbench_expt_is_metal.

5.4.1. Load dataset#

import matminer

from matminer.datasets.dataset_retrieval import load_dataset

# Use matminer to download the dataset

df = load_dataset('matbench_expt_is_metal')

print(f'The full dataset contains {df.shape[0]} entries. \n')

# Display the first 10 entries

df.head(10)

Code hint

To load a different dataset, you simply change the name in 'load_dataset()'.5.4.2. Materials featurisation#

Revisiting concepts from earlier Notebooks, featurising the chemical compositions is necessary to create a useful set of input vectors. This allows the presence (or absence) of an element (or element combinations) to act as a feature that the classifier takes account for.

We will use ElementEmbeddings to featurise the composition column. The importance of the pooling method can be tested by generating two sets of features. In the first, the mean of the atomic vectors is used, while in the second, a max pooling method takes the maximum value of each component across all the atomic vectors in the composition.

# Featurise all chemical compositions

from elementembeddings.composition import composition_featuriser

# Compute element embeddings using mean and max pooling

mean_df = composition_featuriser(df["composition"], embedding="magpie", stats=["mean"])

max_df = composition_featuriser(df["composition"], embedding="magpie", stats=["maxpool"])

# Convert "is_metal" column to integer labels (0, 1)

df['is_metal'] = df['is_metal'].astype(int)

mean_df['is_metal'] = df['is_metal']

max_df['is_metal'] = df['is_metal']

# Define feature matrices and target variable

cols_to_drop = ['is_metal', 'formula']

X_mean = mean_df.drop(columns=cols_to_drop, errors='ignore').values

X_max = max_df.drop(columns=cols_to_drop, errors='ignore').values

y = df['is_metal'].values # Target variable

# Preview first two rows

print("Mean pooling features (first two rows, first 4 columns):")

print(mean_df.iloc[:2, :4])

print("\nMax pooling features (first two rows, first 4 columns):")

print(max_df.iloc[:2, :4])

In the output, you can see two numerical representations of the chemical compositions using different feature extraction techniques. Now let’s see how they cluster.

5.4.3. \(k\)-means clustering#

5.4.3.1. Mean pool#

# Perform k-means clustering

kmeans = KMeans(n_clusters=2, random_state=42)

predicted_labels = kmeans.fit_predict(X_mean)

# NOTE: In k-means, the label '0' or '1' is arbitrary — the algorithm just names clusters.

# If accuracy < 0.5, it means the cluster IDs are reversed compared to our target labels,

# so we flip them here for easier comparison. It's not cheating :)

if accuracy_score(y, predicted_labels) < 0.5:

predicted_labels = 1 - predicted_labels

# Assess performance

accuracy = accuracy_score(y, predicted_labels)

print(f"Accuracy: {accuracy:.2f}")

conf_matrix = confusion_matrix(y, predicted_labels)

plt.figure(figsize=(5, 4))

sns.heatmap(conf_matrix, annot=True, fmt="d", cmap="Blues",

xticklabels=['Predicted Insulator', 'Predicted Metal'],

yticklabels=['True Insulator', 'True Metal'])

plt.xlabel('Predicted label')

plt.ylabel('True label')

plt.show()

5.4.3.2. Max pool#

# Perform k-means clustering

kmeans = KMeans(n_clusters=2, random_state=42)

predicted_labels = kmeans.fit_predict(X_max)

# Adjust k-means output to match true labels

if accuracy_score(y, predicted_labels) < 0.5:

predicted_labels = 1 - predicted_labels

# Assess performance

accuracy = accuracy_score(y, predicted_labels)

print(f"Accuracy: {accuracy:.2f}")

conf_matrix = confusion_matrix(y, predicted_labels)

plt.figure(figsize=(5, 4))

sns.heatmap(conf_matrix, annot=True, fmt="d", cmap="Blues",

xticklabels=['Predicted Insulator', 'Predicted Metal'],

yticklabels=['True Insulator', 'True Metal'])

plt.xlabel('Predicted label')

plt.ylabel('True label')

plt.show()

The difference in accuracy between the two methods for this simple example highlights the importance of choosing an appropriate pooling strategy when featurising materials data. Which pooling better matches linear separability assumptions in k‑means, and why?

5.5. 🚨 Exercise 5#

5.5.1. Your details#

import numpy as np

# Insert your values

Name = "No Name" # Replace with your name

CID = 123446 # Replace with your College ID (as a numeric value with no leading 0s)

# Set a random seed using the CID value

CID = int(CID)

np.random.seed(CID)

# Print the message

print("This is the work of " + Name + " [CID: " + str(CID) + "]")

5.5.2. 🧪 Problem: Comparing Decision Tree Classifiers#

We will test how feature engineering influences the performance of a decision tree classifier on real materials data.

Tasks will be given in class.

#Empty block for your answers

#Empty block for your answers

#Empty block for your answers

.ipynb. The completed file should be uploaded to Blackboard under assignments for MATE70026.

5.6. 🌊 Dive deeper#

Level 1: Tackle Chapter 6 on Linear Two-Class Classification in Machine Learning Refined.

Level 2: Play metal detection. Note, the website can be a little temperamental.

Level 3: Dig deeper into the options for definitions decision trees and ensemble models in scikit-learn.